No, I said potatoes. Not tomatoes.

Voice technology is quickly seeping into our daily lives, and Grocery Gateway has been quick to explore the opportunities that come with it. By collaborating with my UX post-graduate program, their UX team saw a chance to gain insight into how this new technology could impact their business, while giving us students a great opportunity to explore the new and exciting world of voice user interfaces.

Our voices are diverse and complex. We have accents, use local slang, and sometimes the sentence that leaves our mouths doesn’t quite reflect the thought behind it. This project aimed to explore what kind of language people use when managing and ordering groceries online, and whether a voice component could enhance or facilitate the process in any way.

1. What are the advantages and disadvantages of using a voice user interface (VUI) when shopping online?

2. What does natural language sound like when ordering and managing groceries?

3. How does a multimodal experience (VUI + GUI) compare to one that is voice only?

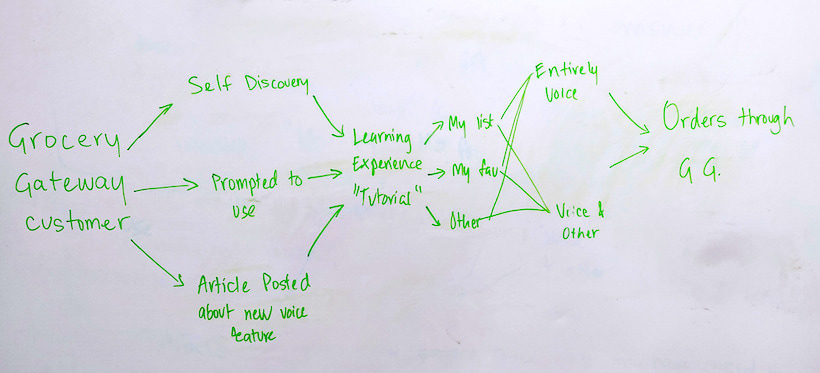

To get a better understanding of the customer journey from start to finish, and to decide which parts of it to focus on, we sketched a quick map of the different scenarios a customer could go through when ordering from the Grocery Gateway website.

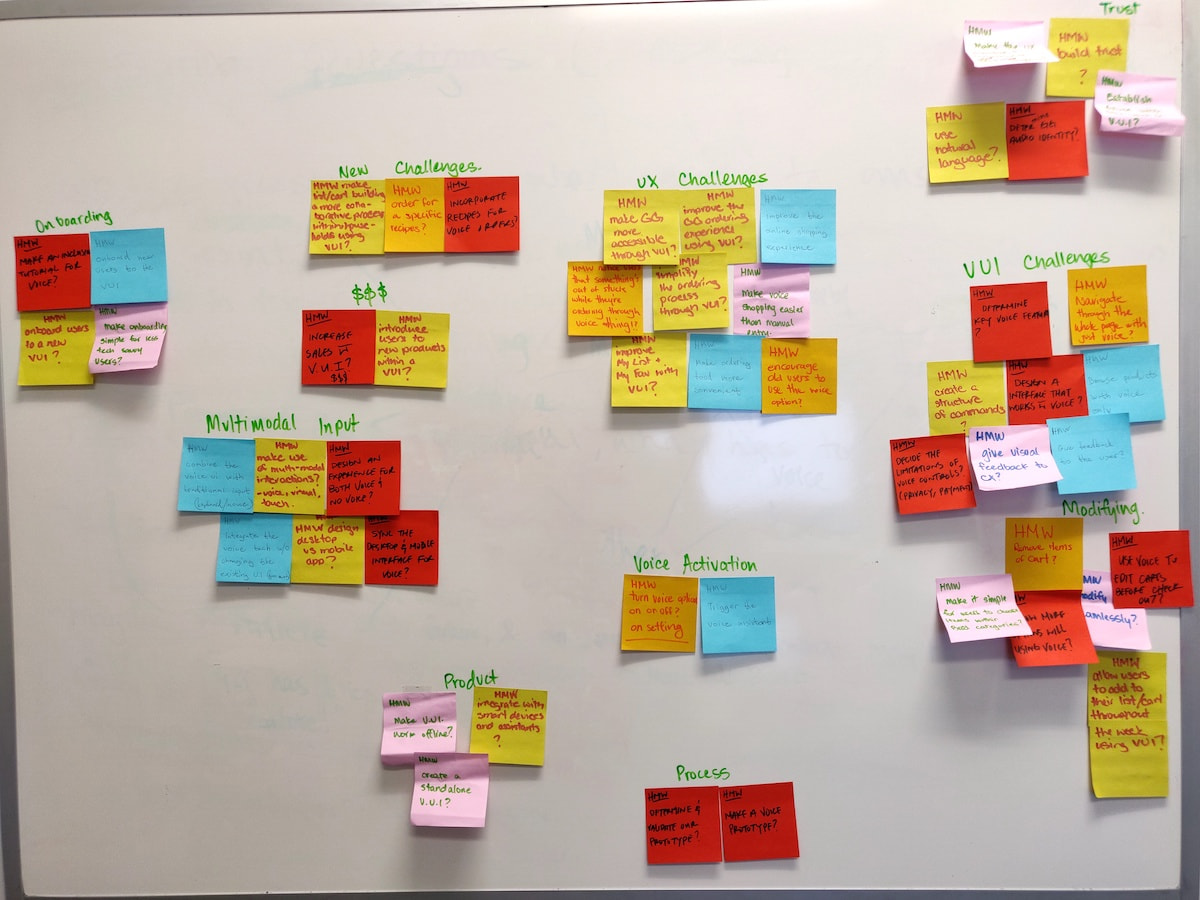

After deciding on which parts of the customer journey we wanted to focus on, we started writing down ideas and potential solutions on post-it notes.

To better frame our thinking, we used the "How Might We" methodology from the Google Ventures design sprint process. With the help of sticky dots, each team member voted on the ideas they thought were worth pursuing. Having taken on the role of the design sprint “Decider” (and being armed with three sticky dots instead of just one), I had the final say on which direction we should take next.

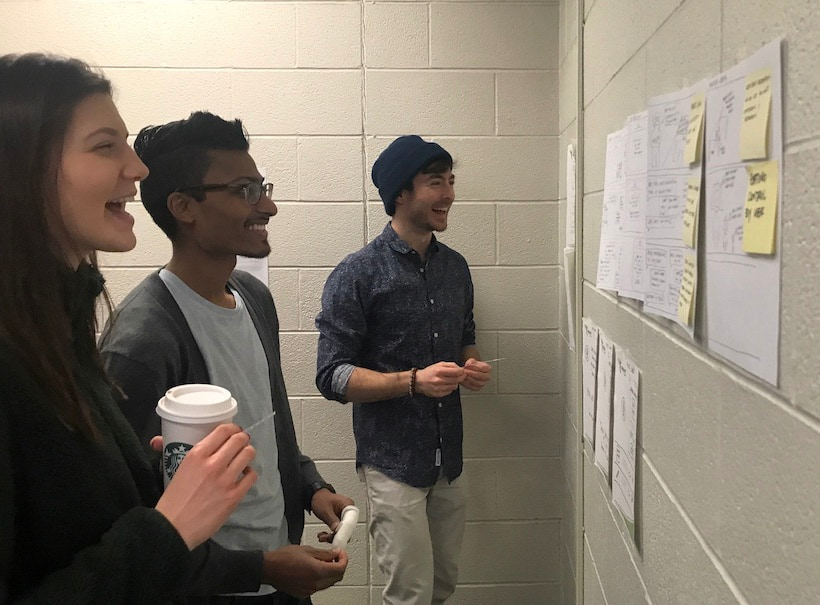

After our voting session it was clear which areas we wanted to focus on. We started sketching out our own ideas around different scenarios and then hung up our sketches on a wall for all group members to view.

We brought out the sticky dots again and started placing them on areas in the sketches that we found interesting. The goal was to narrow it down to two scenarios that we would prototype:

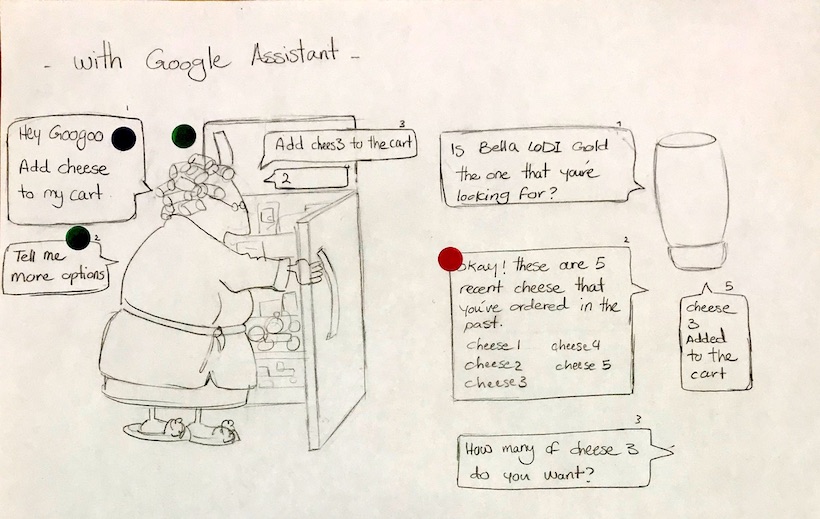

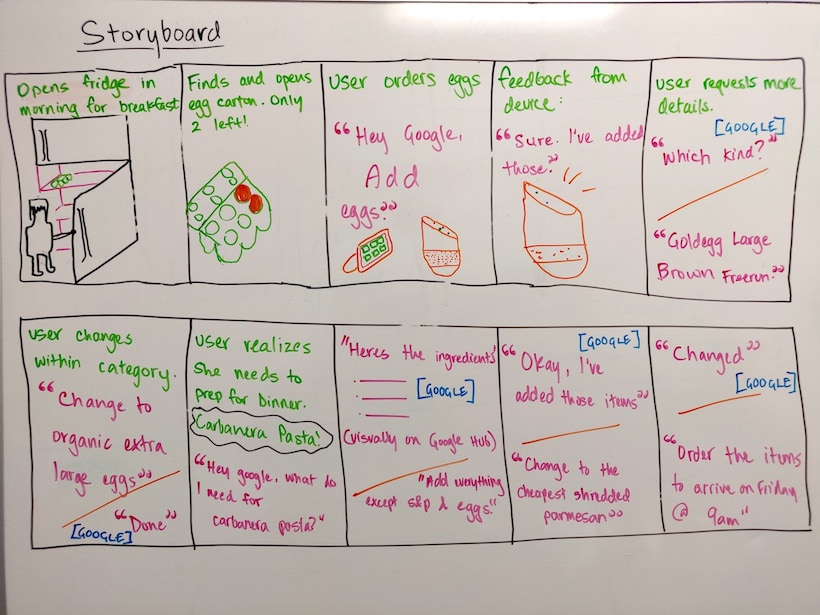

The first sketch shows a voice-only experience where someone is ordering items through their Google Assistant while looking through their fridge. The second sketch explores an experience where someone is adding and modifying items in a cart through a multimodal interface.

We dove a little deeper into the sketches above by drawing a storyboard, which also explores a scenario where the customer requests a recipe.

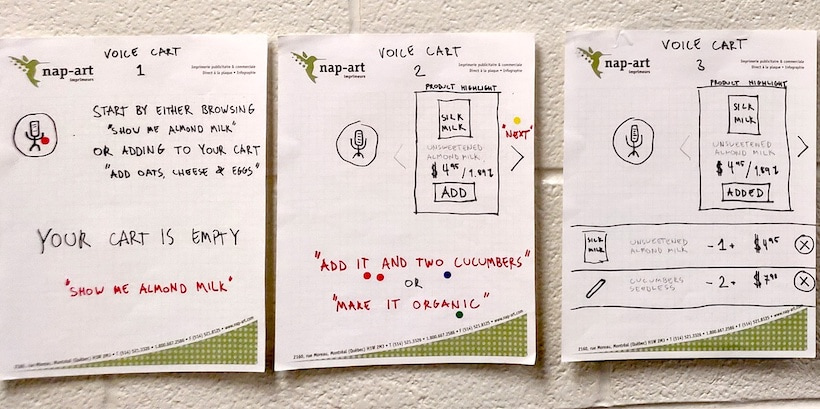

At this stage we had a pretty good understanding of what we wanted to prototype, and why. Our goal was to find out how people use their voice and what kind of language they use when, for example, adding items to their cart, modifying items (choosing an organic alternative, changing the quantity), or responding when an item is out of stock. To validate the assumptions we had made throughout the project so far, we created two prototypes.

Our first prototype (as seen above) was voice-only and consisted of a box with a phone and an Arduino inside it. The Arduino had a sound sensor and some LEDs that would light up whenever our "assistant" was speaking, just to create a more realistic effect. The phone was on a call (on speaker) with one of us on the other end acting as a voice assistant.

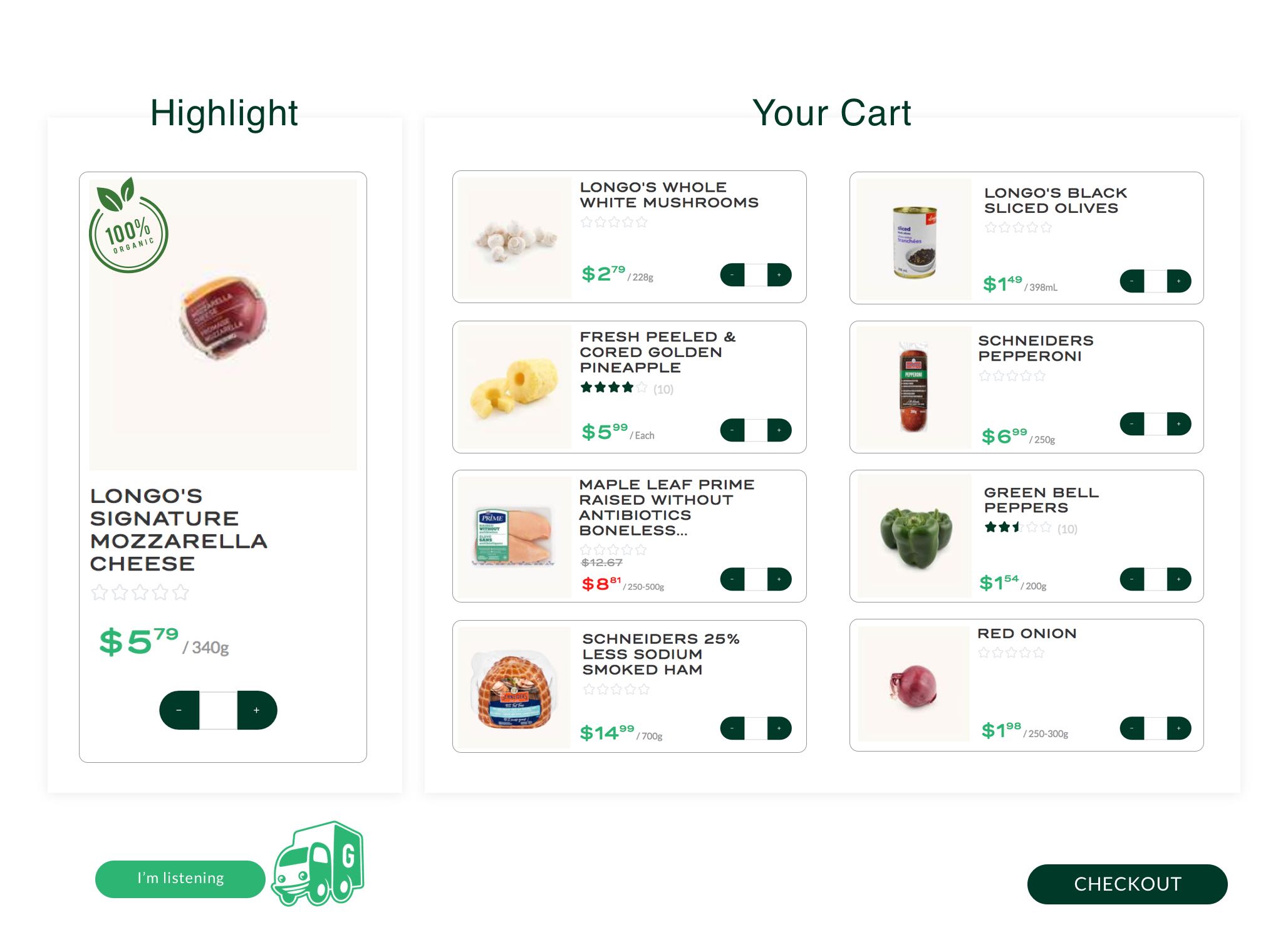

Our second prototype was a combination of a voice interface and a graphical interface. We assumed pretty early on that some kind of visual feedback would be helpful when managing your groceries with your voice, but we didn't know how helpful it would be or if it would make any sense at all. Since few players on the market are currently doing anything similar, this made for a very interesting challenge to explore.

To create the multimodal experience, we initially wanted to use a prototyping tool that has voice functionality built in (like Adobe XD), but we realized that these tools didn't have the support we needed to validate all of our assumptions. To get around this, we decided that I would code a prototype from scratch using JavaScript and the speech recognition API in Google Chrome (the Web Speech API). You can find the code on my GitHub.
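To give a sense of what the speech recognition side of such a prototype involves, here is a minimal sketch. It is not the project's actual code: the function name `startListening`, its parameters, and the `en-US` locale are assumptions made for illustration. It only uses the standard `SpeechRecognition` interface, which Chrome exposes under the `webkitSpeechRecognition` name.

```javascript
// Illustrative sketch, not the code from the project.
// onTranscript: callback that receives each final transcript as a string.
// Recognition: optional constructor override (handy for testing outside a browser).
function startListening(onTranscript, Recognition) {
  // Chrome exposes the constructor with a webkit prefix.
  const Ctor =
    Recognition ||
    (typeof window !== "undefined" &&
      (window.SpeechRecognition || window.webkitSpeechRecognition));
  if (!Ctor) {
    throw new Error("Speech recognition is not available in this environment");
  }

  const recognition = new Ctor();
  recognition.lang = "en-US"; // assumed locale
  recognition.continuous = true; // keep listening across several utterances
  recognition.interimResults = false; // only deliver final transcripts

  recognition.onresult = (event) => {
    // Only look at the results that are new since the previous event.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (result.isFinal) {
        // Each result holds one or more alternatives; take the first one.
        onTranscript(result[0].transcript.trim());
      }
    }
  };

  recognition.start();
  return recognition;
}
```

In Chrome, calling `startListening((text) => console.log(text))` would ask for microphone permission and then log each recognized phrase. Those phrases can then be passed on to whatever logic updates the cart.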

For our graphical interface we designed a representation of your cart with a list of all the items in it, along with an additional more experimental feature where you could see a highlighted product that made it easier to visualize item customization. The idea was that the item you're currently modifying would be in focus while also adding some additional information about it.

The item in the highlighted area enabled our participants to use simple language like “make it organic”, or “double the quantity”, or “change the brand to [brand]”, and then the highlighted item would be the target of that modification. When our participants were finished modifying the item they would move on to the next item and so forth.
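The idea of a highlighted item acting as the implicit target of a command can be sketched roughly as follows. This is an illustration under stated assumptions, not the logic we shipped in the prototype: the `applyCommand` function, the item shape (`name`, `brand`, `quantity`, `organic`), and the simple keyword matching are all hypothetical.

```javascript
// Illustrative sketch: apply a spoken command to the currently highlighted item.
// Hypothetical item shape: { name, brand, quantity, organic }.
function applyCommand(item, utterance) {
  const text = utterance.trim();

  // "make it organic"
  if (/\borganic\b/i.test(text)) {
    return { ...item, organic: true };
  }

  // "double the quantity"
  if (/\bdouble\b/i.test(text)) {
    return { ...item, quantity: item.quantity * 2 };
  }

  // "change the brand to [brand]"
  const brandMatch = text.match(/\bchange the brand to (.+)$/i);
  if (brandMatch) {
    return { ...item, brand: brandMatch[1] };
  }

  // Unrecognized phrasing: leave the item as it is.
  return item;
}

// Example: the highlighted item is the one being modified.
const highlighted = { name: "potatoes", brand: "Generic", quantity: 2, organic: false };
const updated = applyCommand(highlighted, "double the quantity");
console.log(updated.quantity); // 4
```

Because the highlighted item supplies the context, the commands never need to name the product. Keyword matching like this is deliberately naive, though: a phrase such as "not organic" would still switch the item to organic, so anything beyond a test prototype would need more careful language handling.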

We conducted usability tests with a total of 5 participants. Every test included an introduction with warm-up questions, 2 scenarios (one for each prototype), and follow-up questions. The moderator script for our user interviews along with our scenarios can be found here.

“I’d like to be able to say 5 items at once, instead of just one at a time.”

All participants wanted to be able to order their groceries in one speaking instance, rather than list one item at a time.

“Since I can't see my changes, it's difficult for me to trust the voice assistant...”

In our first, voice-only scenario, participants weren't always aware when the Google Assistant made mistakes, and incorrect items were often added to the cart.

“I’m big on visuals. I need to see what I’m trying to buy.”

By pairing a voice interface with visual feedback, we found that the combination created a much more efficient and intuitive grocery shopping experience than a voice-only interface.

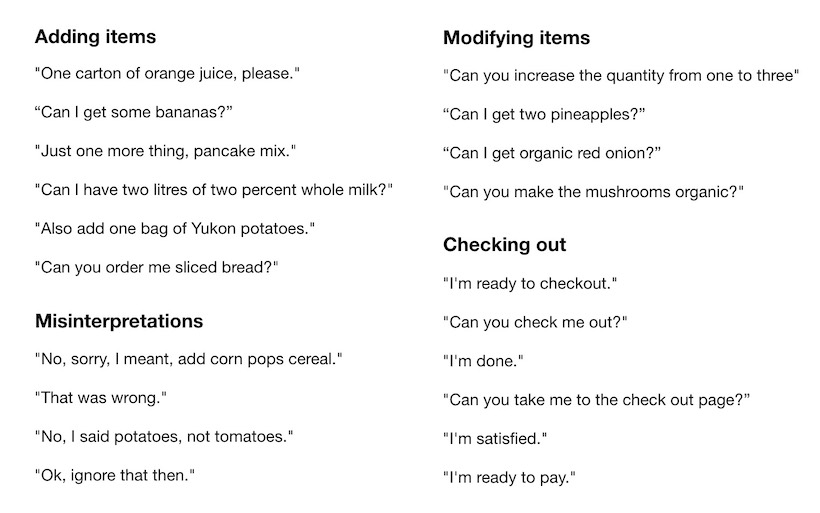

Since the main goal of our usability testing was to see how people use their own natural language, here are a few examples of how our participants talked to the prototype. It was interesting to see how everyone had their own unique way of saying things.

The race to provide voice-enabled retail experiences has begun, and this project provided a good introduction to the design challenges that lie ahead. It quickly became clear that something as complex as modifying a shopping cart filled with items can become cumbersome and confusing without any visual feedback. By actually seeing the items in the cart and the changes made to them in real time, our participants reported having a much better overview of, and more trust in, what would actually be ordered once they checked out.